Let's dive into the first significant hurdle of building exclusively with serverless AWS services: DynamoDB.

First, DynamoDB is incredible. You may have heard its tagline: single-digit millisecond performance at any scale. Use it correctly and its performance, reliability, and scalability are unmatched.

We've done the traditional relational database thing for years now. "Never duplicate data," they said. "Model your data and worry about querying it later." "Joins! Ad-hoc queries! Yay!" Not anymore. With DynamoDB, denormalizing and duplicating data is common, encouraged even. And if you don't think about your query patterns before modeling your data, you're going to have a bad time. No joins for you!

There's a lot of noise out there about how DynamoDB works, provisioned vs. on-demand capacity mode, how many partitions you have and when they are allocated, how to avoid "hot" partitions and throttling (or ignoring it entirely because of adaptive capacity), how to choose keys, ensuring keys receive uniform access (this recommendation seems darn near impossible in the real world), how to index data, the best way to model for single-table design. The following is where we landed and how we got there. Want a longer version? Highly recommend Alex DeBrie's The DynamoDB Book. Sadly for me, the book came out after we figured all this out.

Capacity mode

You can choose between two billing modes for reads and writes to your tables: provisioned and on-demand. I'm not going into the details on these modes here, as there is a wealth of info on them elsewhere. Just going to say this: start with on-demand mode.

We use exclusively on-demand mode for now, and I'd highly recommend starting there for nearly any new table. Costs are more than reasonable for our primary database and we want to avoid throttling at all cost. On-demand mode means we just pay the bill for actual usage, never really worry about throttling, and punt provisioned mode cost optimizations down the road. Maybe someday it'll be worth the effort.

Partitions and keys

Properly partitioning our items for a variety of access patterns was, by far, the most challenging part of adopting DynamoDB. To this day, we're still learning new and creative ways to model increasingly complex data structures efficiently. Our relational brains wanted to skip this or quickly pick keys and move on, not realizing it's the most important part of a NoSQL data modeling process. We had it very wrong before we got it right.

Every item in DynamoDB must have a partition key (PK). DynamoDB uses this key when determining the physical location to store and retrieve each item. Items can also have a sort key (SK) that is unique within that partition key, and items that share a partition key are stored together and ordered by the sort key value for efficient and predictable querying.

I originally thought that each item or collection of items with a unique partition key always lived on its own partition in DynamoDB and therefore enjoyed the potential throughput of 3,000 read capacity units (RCUs) / 1,000 write capacity units (WCUs) per second. Wrong! Items or collections with a unique partition key are stored together on a physical partition, but often share that physical partition with other keys. They share throughput limits too until DynamoDB decides to move keys to another partition. Each physical partition enforces the 3,000 RCU / 1,000 WCU limitation and DynamoDB manages distribution of partition keys across physical partitions. The placement of keys across partitions is completely opaque as it is managed by the DynamoDB service. Similarly, the addition of physical partitions and re-balancing keys across them is fully managed by the service.

New on-demand tables are provisioned with 4 physical partitions, allowing for up to 12,000 RCUs / 4,000 WCUs per second across the whole table. I can't find that written down in any AWS documentation, but Rick Houlihan said it on Twitch once, so that's good enough for me. As more throughput is needed, the DynamoDB service automatically adds partitions and balances your keys across them. If you know you need more throughput right away, create a table in provisioned mode with 100,000 WCUs (which allocates ~100 physical partitions to your table), then switch it to on-demand mode. Don't forget to this switch though or you'll get a gnarly bill! Tables in on-demand mode can always instantly handle their previous throughput high and once a physical partition is allocated it is never taken away.

Poor keying lessons learned

Ready Five is a multi-tenant application, and all data is always scoped to an account. We thought it would be really cool if all data for each account shared a single partition key! Then when an account is deleted we can easily purge the whole collection without worrying about orphaning items. We could easily query for a whole account at once as well, which sounded great for allowing customers to easily export their data. Such newbies.

| PK | SK | |

|---|---|---|

acct_xxx |

team_yyy |

(Team Y) |

acct_xxx |

inc_aaa |

(Incident A) |

acct_xxx |

inc_bbb |

(Incident B) |

acct_xxx |

inc_ccc |

(Incident C) |

acct_xxx |

schedule_ddd |

(Schedule D) |

This worked well until we realized what is now obvious in hindsight. All the read and write throughput for an account, regardless of its size, will be executed on a single partition. 3,000 reads and 1,000 writes per second might sound like plenty for a single customer, but it creates a hard cap that cannot be increased, potentially throttling requests for our biggest customers who rely on our product the most.

Equally important to consider: not all reads and writes are created equal. Physical partitions can each handle 3,000 RCUs and 1,000 WCUs per second, but it's likely that individual read and write requests will consume far more than one RCU or WCU. If the average size of the items you're writing is 2KB, for example, each write request will use 2 WCUs, allowing each partition to accept up to 500 write requests per second. Still a lot, but you can see how overusing a partition key with too much frequently-used data can quickly cause concern for throttling down the road.

Improved keying

Instead of a "this sounds cool" partitioning scheme, we started over, focusing instead on throughput requirements and boundaries of each query pattern.

For example, we determined we need to be able to query all the teams, schedules, shifts, and overrides in a given account at once so we can efficiently calculate who is on call account-wide. Since teams, schedules, shifts, and overrides are not particularly high velocity on either reads or writes, they all live in the same partition key per account (acct_xxx#team).

We always name the partition key PK and the sort key SK. They're generic (they make sense for any type of item) and they're short (attribute names are part of calculated size of each item, so fewer characters is better).

| PK | SK | |

|---|---|---|

acct_xxx#team |

team_yyy |

(Team Y) |

acct_xxx#team |

team_yyy#schedule_ddd |

(Schedule D) |

acct_xxx#team |

team_yyy#schedule_ddd#override_eee |

(Override E) |

acct_xxx#team |

team_yyy#schedule_ddd#shift_eee |

(Shift E) |

This nesting approach on the sort key gives us all the flexibility we need. We can query for all teams, schedules, shifts, and overrides across the account with a PK = "acct_xxx#team" query. We can query for a given schedule along with all its shifts and overrides with PK = "acct_xxx#team" AND begin_with(SK, "team_yyy#schedule_ddd").

Similarly, we know we need to be able to list all users in an account, but never need to access a user's contact methods or notification rules outside the context of the user who owns them. So, user items live at the top level of an account, but contact methods and notification rules are assigned to a partition key dedicated to each user.

| PK | SK | |

|---|---|---|

acct_xxx#user |

user_xxx |

(User X) |

acct_xxx#user |

user_yyy |

(User Y) |

acct_xxx#user_xxx |

cm_yyy |

(Contact Method Y on User X) |

acct_xxx#user_xxx |

nr_yyy |

(Notification Rule Y on User X) |

acct_xxx#user_yyy |

cm_zzz |

(Contact Method Z on User Y) |

Given this partitioning scheme, we can fetch all users in an account with PK = "acct_xxx#user" AND begin_with(SK, "user_"). It's impossible to get contact methods or notification rules with that query, and that's just fine with us. To fetch all contact methods and notification rules for a particular user, we can query withPK = "acct_xxx#user_xxx"or we can fetch just contact methods withPK = "acct_xxx#user_xxx" AND begin_with(SK, "cm_").

Lastly, and most importantly for our application, we'll cover incidents. Incidents are our highest velocity item for both reads and writes. They're also the largest in terms of item size, sometimes exceeding 300KB of customer-supplied data. This creates an interesting dilemma. We want to list incidents account-wide with a single query, but they're sometimes huge. Less than ideal when you can't grab more than 1MB at a time from DynamoDB and you'd run up a solid bill if a primary access pattern is constantly loading that much data.

Here's where we really let go of all the relational knowledge we instinctively cling to. When an incident is created, a predictably small item is created containing its title, number, and a handful of other metadata attributes in a single, account-level partition.

{

"PK": "acct_1eSuXwHoigx1WzTqBimRK2mvvEj#inc",

"SK": "inc_1owPwtJ5JZbNMlR4Cccqm3qC7PH",

"ID": "inc_1owPwtJ5JZbNMlR4Cccqm3qC7PH",

"CreatedAt": 1614194921,

"UpdatedAt": 1614194982,

"Title": "Application error rate exceeded 1%",

"Status": "RESOLVED",

"State": "CLOSED",

"Number": 859,

"...": "..."

}

A separate incident data item is written containing the incident details, which is the part that tends to be large. It uses a partition key dedicated to that incident and does not use any index keys, ensuring it's omitted from indexes.

{

"PK": "acct_1eSuXwHoigx1WzTqBimRK2mvvEj#inc_1owPwtJ5JZbNMlR4Cccqm3qC7PH",

"SK": "data",

"CreatedAt": 1614194921,

"UpdatedAt": 1614194921,

"AccountID": "acct_1eSuXwHoigx1WzTqBimRK2mvvEj",

"Details": "{\"potentially super duper massive\":{\"json\":\"string\"}}",

"IncidentID": "inc_1owPwtJ5JZbNMlR4Cccqm3qC7PH",

"DetailsType": "JSON"

}

This way, we can query for all the smaller summary incident items in an account with PK = "acct_xxx#inc" AND begin_with(SK, "inc_"), omitting all incident details items when loading the collection. DynamoDB read and write capacity units are calculated and billed based on item size, and this ensures we're only loading the massive details item with a separate query when an action warrants it.

Indexes

Querying only your table's partition and sort keys gets you only so far. Ultimately, you're going to want access to your data from different angles. For this, we use indexes.

DynamoDB offers two types of indexes: Local Secondary Indexes (LSIs) and Global Secondary Indexes (GSIs). LSIs allow you to specify a different sort key while retaining the same partition key as the table. GSIs allow you to specify a different partition key and sort key, completely repartitioning the data.

My suggestion: never use LSIs. They introduce a pair of limitations that might bite you down the road.

- They can only be created when the table is created and cannot be modified or deleted later.

- Adding any LSIs to a table limits all item collections to 10GB.

The former is mainly just annoying because of the pressure it puts on getting these indexes correct on day one for a table that you could rely on for a decade or more.

The latter is a foot gun. It means that each collection of items with the same partition key must never exceed 10GB in size. Hard cap, no increases via support ticket. The only way to add items to that collection is by deleting existing items. Until then, writes to full collections are rejected. Combine this with not being able to remove LSIs after table creation and you'll realize you can't get out of your maxed-out partition bind without purging data or moving to another table.

GSIs have neither of these limitations. They can be added and removed anytime and partition size is unlimited. The only real drawback to GSIs is that they're eventually consistent, so you can't guarantee immediate read-after-write consistency. Tradeoffs.

In our case it's hard to imagine a single account maxing out its root incidents partition with 10GB of data, but I don't want to worry about it today or 5 years from now. GSIs for life.

We create nearly all tables with the same key/index schema. The table's key attributes are PK and SK and each of 3 GSIs get dedicated key attributes. We always project all attributes (and always recommend that unless you know you have good reason not to), which means we don't need to follow up an index query with a table query to get all the data. Projecting all attributes costs more than projecting keys only, but the hassle just isn't worth it for a primary datastore.

Here's a CloudFormation template for this schema if you want to deploy a table like this to your own account. We enable time-to-live on an ExpiresAt attribute and enable point-in-time recovery.

| Partition Key Attribute | Sort Key Attribute | |

|---|---|---|

| Table | PK (String) |

SK (String) |

| GSI1 | GSI1PK (String) |

GSI1SK (String) |

| GSI2 | GSI2PK (String) |

GSI2SK (String) |

| GSI3 | GSI3PK (String) |

GSI3SK (String) |

This allows up to 4 different ways to query each item. You can add as many GSIs as you need, but so far we haven't needed more than 3 for any given item. And since the table uses on-demand billing, sparse or unused indexes don't affect cost.

Keeping all keys generic means you can choose whether or not items land on indexes by setting those attributes or not on a per-item basis. We have a few dozen types of items on our main table, but only a handful use GSI2 and GSI3. If GSI key attributes are omitted when writing the item, the item is omitted from the index.

Put it all together

Let's dial this up a notch and showcase a more complex item that is queried multiple ways.

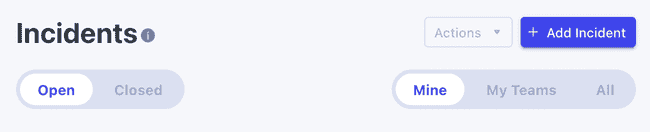

Ready Five allows you to view a list of "My Incidents" and "My Teams' Incidents". My Incidents are the incidents that you created, that have been assigned to you, or those where you've been a participant in some way. My Teams' Incidents are incidents that have been assigned to any of the teams you're a member of.

A quick "participations" join table in the relational world would do the trick, but not here. We'll stick with the notion of "participations", but instead of a join table we'll create an item for each participant. Each participation item is a copy of the root incident item with different table and index keys. Like the root incident item, the incident details are not included.

Here's a sample participation item (some attributes redacted for brevity). Notice both the table and GSI partition keys have the account ID as a prefix. This isn't strictly necessary since all items have a unique ID, but it's an extra security measure we take to ensure no one can ever execute queries for items outside their account.

{

"PK": "acct_1eSuXwHoigx1WzTqBimRK2mvvEj#inc_1owPwtJ5JZbNMlR4Cccqm3qC7PH#incpart",

"SK": "team_1h0QJANrGKFCZHSDO526fs6Jbg8",

"GSI1PK": "acct_1eSuXwHoigx1WzTqBimRK2mvvEj#team_1h0QJANrGKFCZHSDO526fs6Jbg8#incpart",

"GSI1SK": "CLOSED#2021-02-24T19:28:41Z",

"Title": "Application error rate exceeded 1%",

"ID": "inc_1owPwtJ5JZbNMlR4Cccqm3qC7PH",

"ParticipantID": "team_1h0QJANrGKFCZHSDO526fs6Jbg8",

"CreatedAt": 1614194921,

"UpdatedAt": 1614194982,

"Status": "RESOLVED",

"State": "CLOSED",

"Number": 859,

"...": "..."

}

Any time the root incident item is updated, we'll need to propagate those changes to each participation item by issuing a strongly consistent query of the whole partition with PK = "acct_xxx#inc_yyy#incpart", then updating each of the returned items with the changes. Since strong consistency is a requirement for this query, it needs to be a table query (not a GSI query).

Using the participant ID as the SK ensures we never have duplicate participations for a given team or user (table sort keys must be unique within the partition key).

Our user-facing query is the one displaying "My Incidents" or "My Teams' Incidents." Sidenote: we never list all incidents for a participant, we always query them on the GSI by their state - open or closed. So to get "My (Closed) Incidents" we query the GSI1 index for GSI1PK = "acct_xxx#user_yyy#incpart" AND begin_with(GSI1SK, "CLOSED#").

For "My Teams (Closed) Incidents", our application code needs to do a bit more work. The participation items exist in different partitions, there's no way to get them with a single query. We must execute multiple queries (one for each team the user is on), then merge the results together. So we do exactly that. We spin off a goroutine for each team with a GSI1PK = "acct_xxx#team_yyy#incpart" AND begin_with(GSI1SK, "CLOSED#") query, and combine/deduplicate the results before returning them to the client.

Real talk. This was infuriating when building it. Merging and deduplicating then figuring out accurate cursors and pagination from multiple result sets in our business logic felt like a step backwards. In the back of my head I know MySQL could handle multiple-team queries and the engine would handle deduplication and limiting the result count before anything is even returned to the application.

In the end though, this concurrent querying approach works really well and scales infinitely. Like most of the services we'll discuss in this journey series, the all-in Serverless decision means some more work up front for long-term payoffs. And now we have a pattern for this type of query that we're able to use elsewhere with a lot less effort.

Conclusion

There's no doubt that MySQL or PostgreSQL would have been an easier choice for the early days of our product, but being all-in on a database that's fully managed, scales infinitely, never has scheduled maintenance or upgrades, and only bills us for actual usage continues to make us thrilled with our decision to embrace DynamoDB. We can deploy an unlimited number of environments with an unlimited number of tables to any region around the world without thinking about instance sizes, memory allocation, or storage. And with it being pay-as-you-go, these environments can sit unused without billing consequences.

Despite DynamoDB's relatively small API surface area, we're still learning new and interesting ways to model and query data with it. There's a bit of a learning curve with table and index design patterns, but there are now plenty of fantastic resources available to help you get your head around all of its features and best practices.

If you have questions about anything covered here or about DynamoDB, or want to share some tidbits of your journey, ping me on Twitter!

Up next in this journey series we're going to talk about how we're using Lambda and the go1.x runtime for all Ready Five business logic. See you then!